3 APRIL 2025

PLEASE NOTE THAT OWING TO COPYRIGHT OR INTELLECTUAL PROPERTY PERMISSIONS WE ARE UNABLE TO SHARE RECORDINGS OF SOME SESSIONS

VIDEO: DAY 1 SUMMARY: Chapa Sirithunge (University of Cambridge, UK)

VIDEO: DAY 2 MORNING SESSION SUMMARY: Perla Maiolino (University of Oxford, UK)

VIDEO: DAY 2 CLOSING PANEL DISCUSSION

PLENARY TALKS

HIROKI UEDA (RIKEN & University of Tokyo)

VIDEO: WHY DO WE SLEEP? THE ROLE OF CALCIUM AND PHOSPHORYLATION IN SLEEP.

Abstract: Sleep is one of the great mysteries of life: why do we need it, and how does our brain regulate it? At the Sleep 2012 conference in Boston, we arrived at a new way of thinking about sleep regulation. Instead of assuming that sleep is triggered by specific “sleep substances,” we suggested that the brain might monitor wakefulness substances, such as calcium, to determine when sleep is needed. This idea led us to explore the role of calcium in sleep. Building on Dr. Setsuro Ebashi’s discovery that calcium acts as a key signalling molecule in cells, we hypothesized that calcium might not only activate neurons but also help regulate sleep. To test this, in 2016 we developed a powerful gene-editing technique called the Triple-CRISPR method, which allowed us to create genetically modified mice with over 95% efficiency. Using this technique, we studied 25 different genes related to calcium channels and pumps and found that calcium plays a crucial role in sleep by acting as a brake on brain activity.

We also developed CUBIC, a method that makes brain tissue transparent, allowing us to see how calcium affects neurons. Our research revealed that calcium-dependent proteins, such as CaMKIIα/β, store a “memory” of calcium activity and use it to regulate sleep. We also identified three specific sites on these proteins that control when sleep begins, how long it lasts, and when it ends. Additionally, we found that certain enzymes, such as PKA, PP1, and calcineurin, act as sleep switches: some keep us awake, while others help us sleep. Our discoveries led to a new sleep model called WISE (Wake Inhibition Sleep Enhancement), which challenges the traditional SHY (Synaptic Homeostasis Hypothesis). Whereas SHY proposes that sleep weakens unnecessary brain connections, WISE proposes that quiet wakefulness suppresses neuronal connections and deep sleep strengthens them. This model also helps explain why chronic sleep deprivation can contribute to depression and why some fast-acting antidepressants increase deep sleep activity.

By understanding how calcium and brain activity regulate sleep, we hope to unlock new ways to improve sleep health and develop better treatments for sleep disorders and mental health conditions.

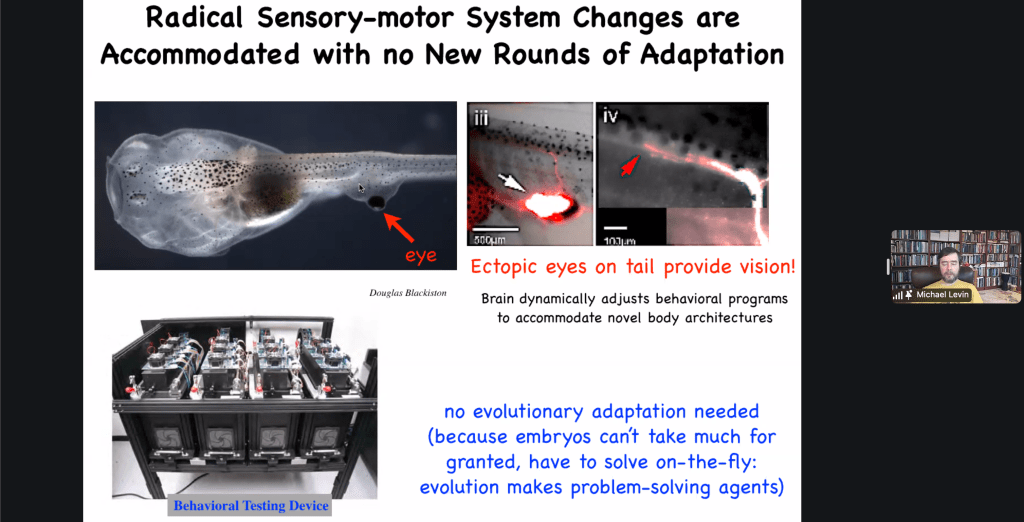

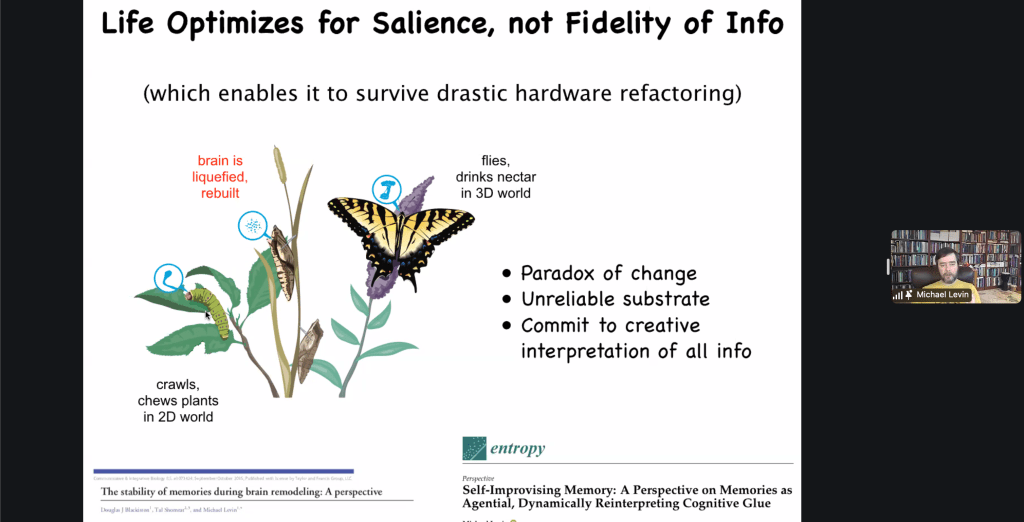

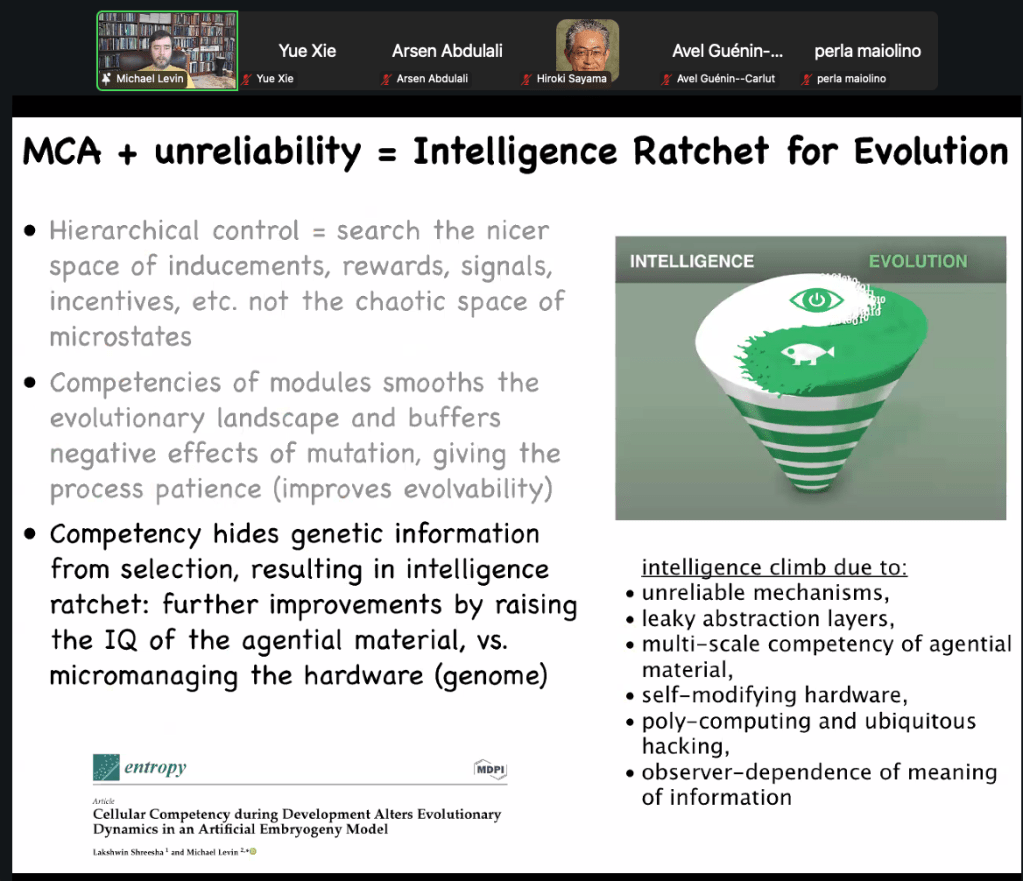

MICHAEL LEVIN (Tufts University, USA)

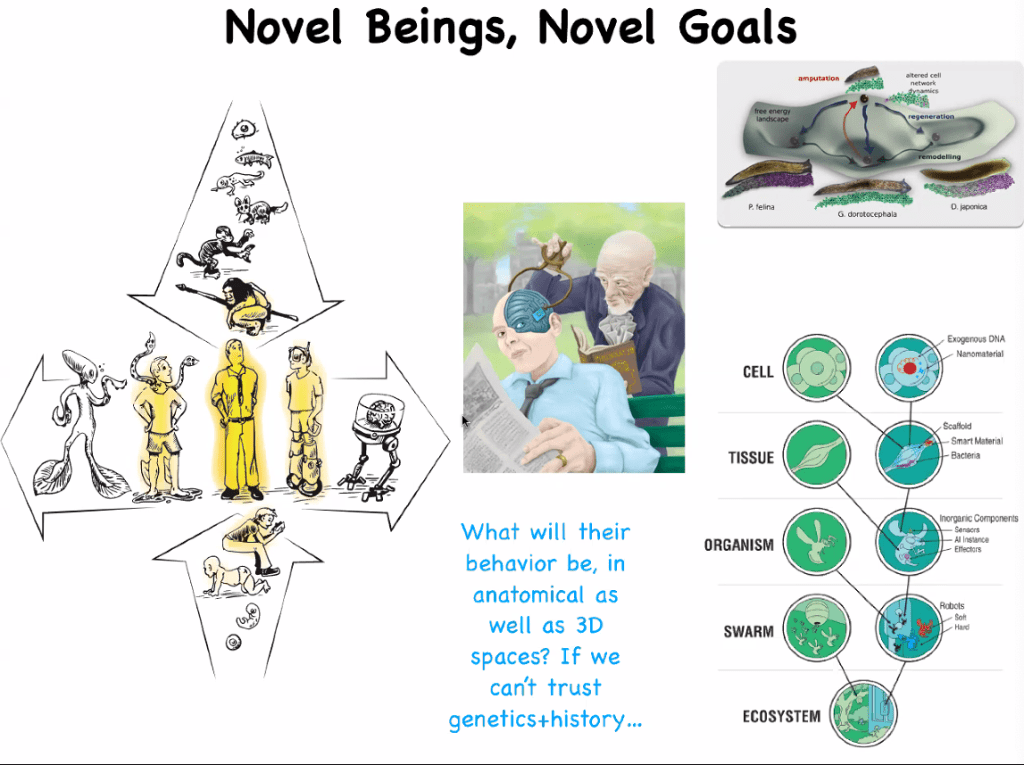

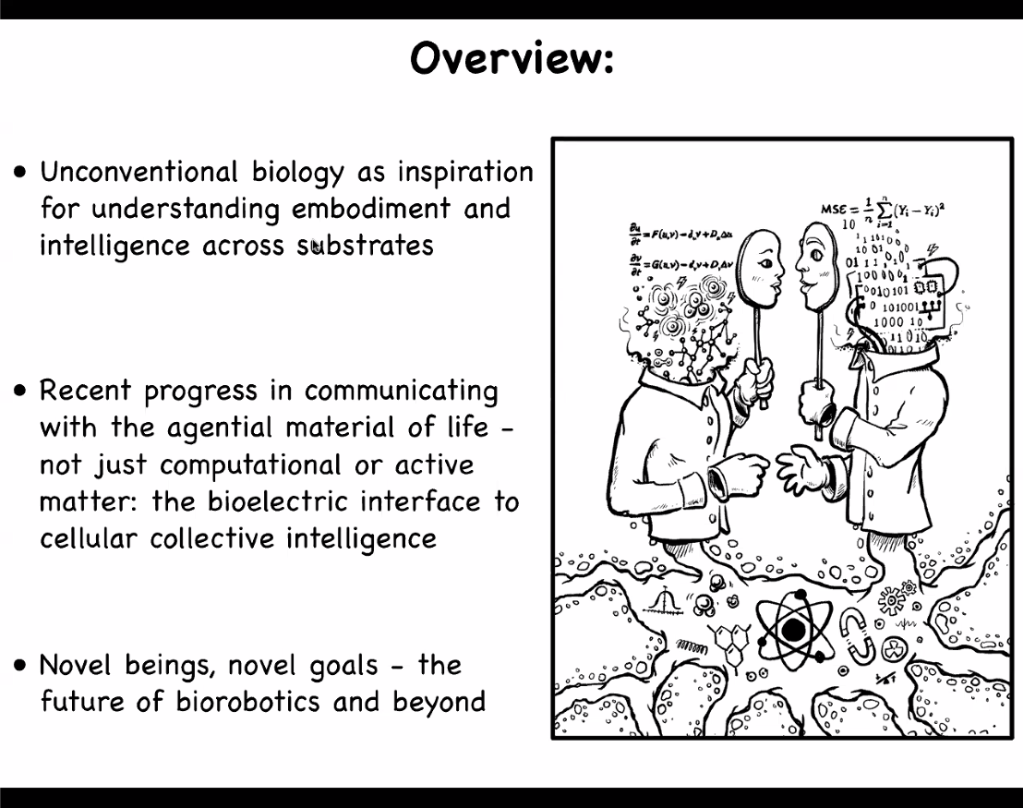

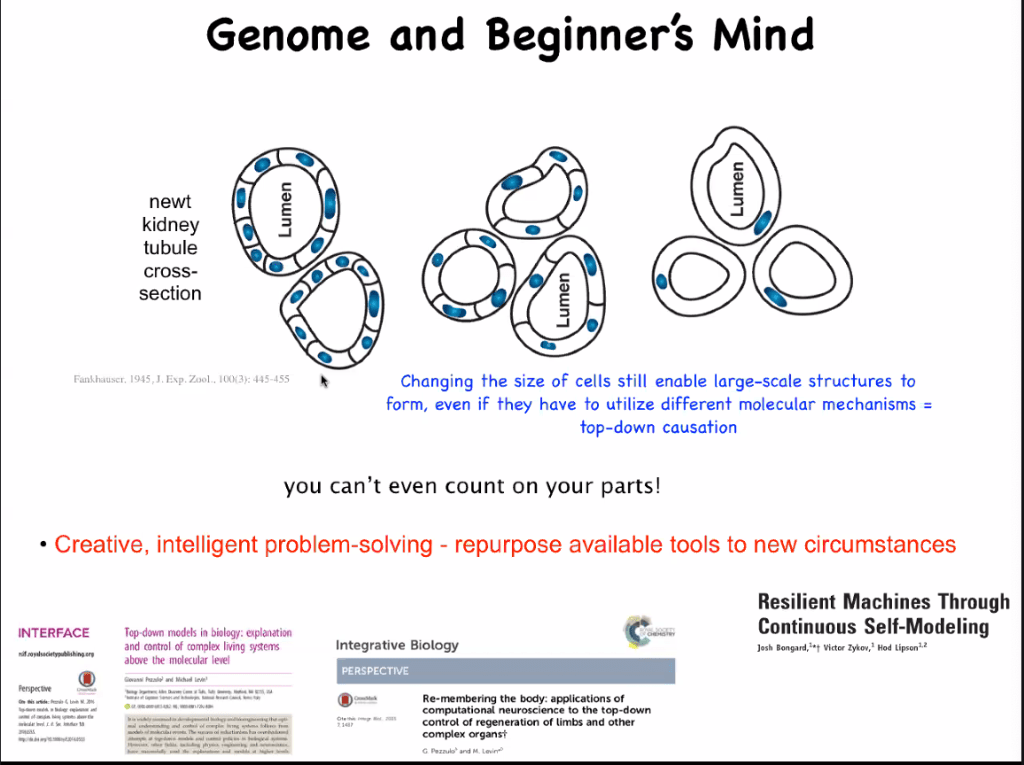

VIDEO: DIVERSE INTELLIGENCE IN UNCONVENTIONAL SUBSTRATES: LIFE AND BEYOND

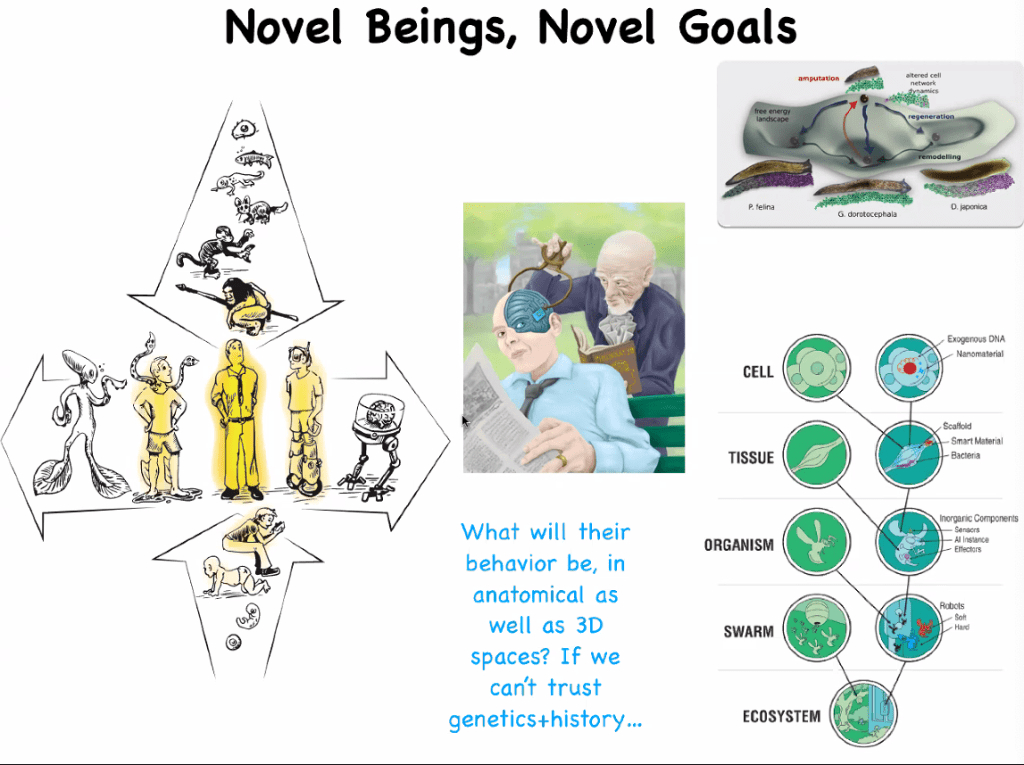

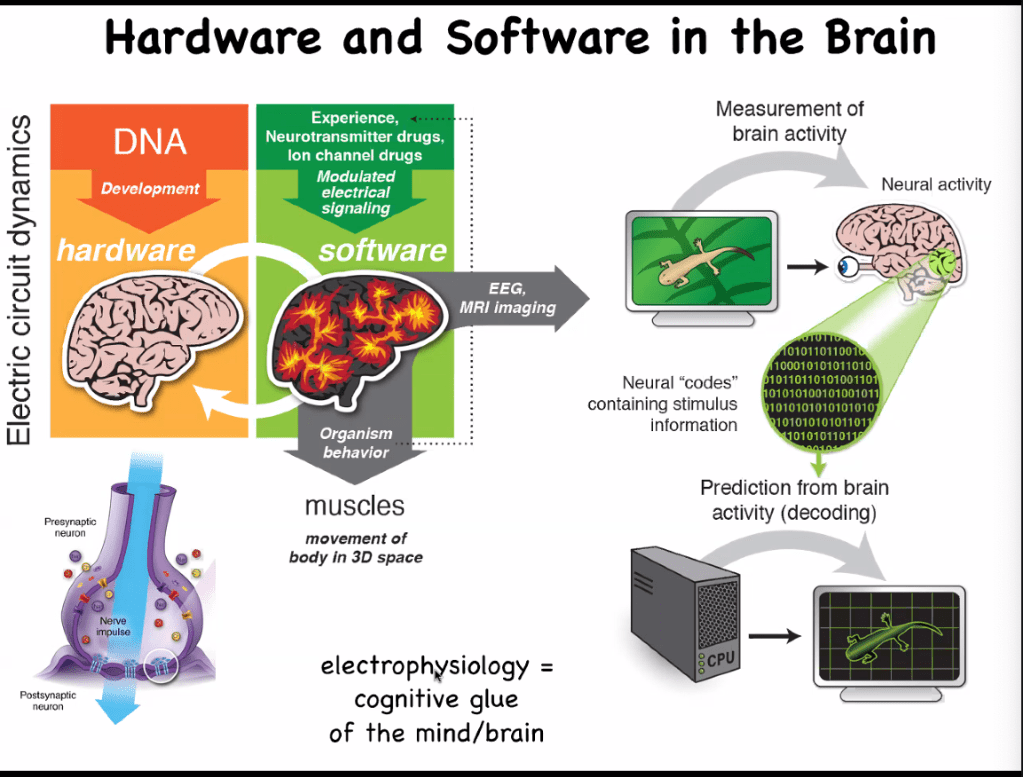

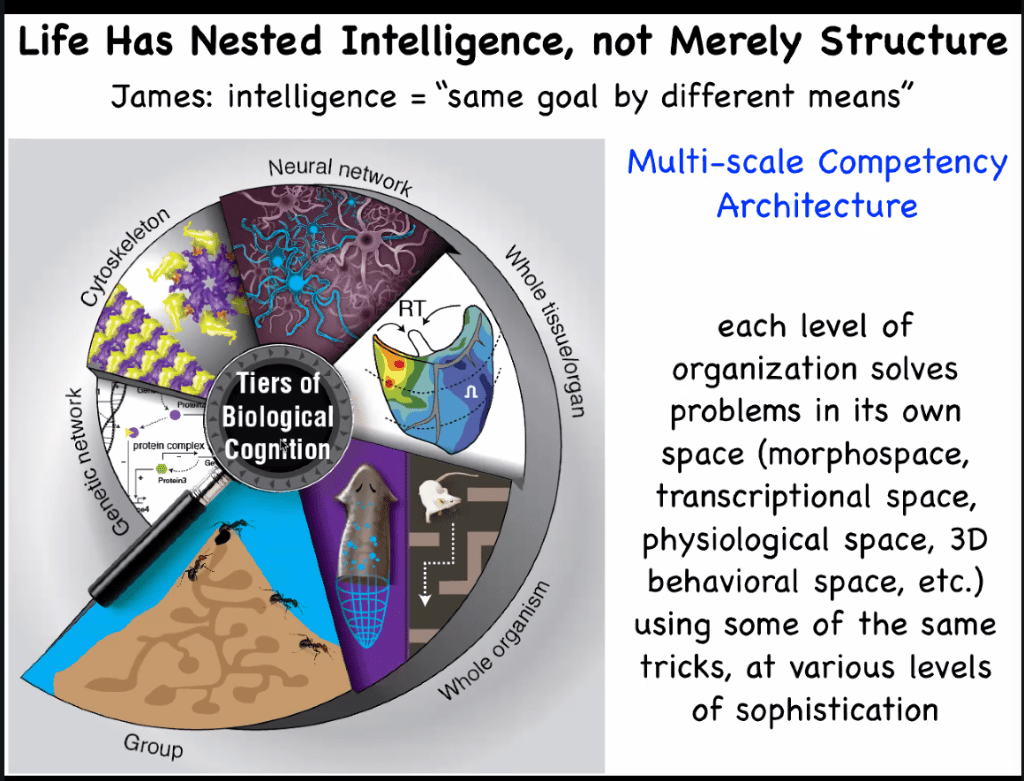

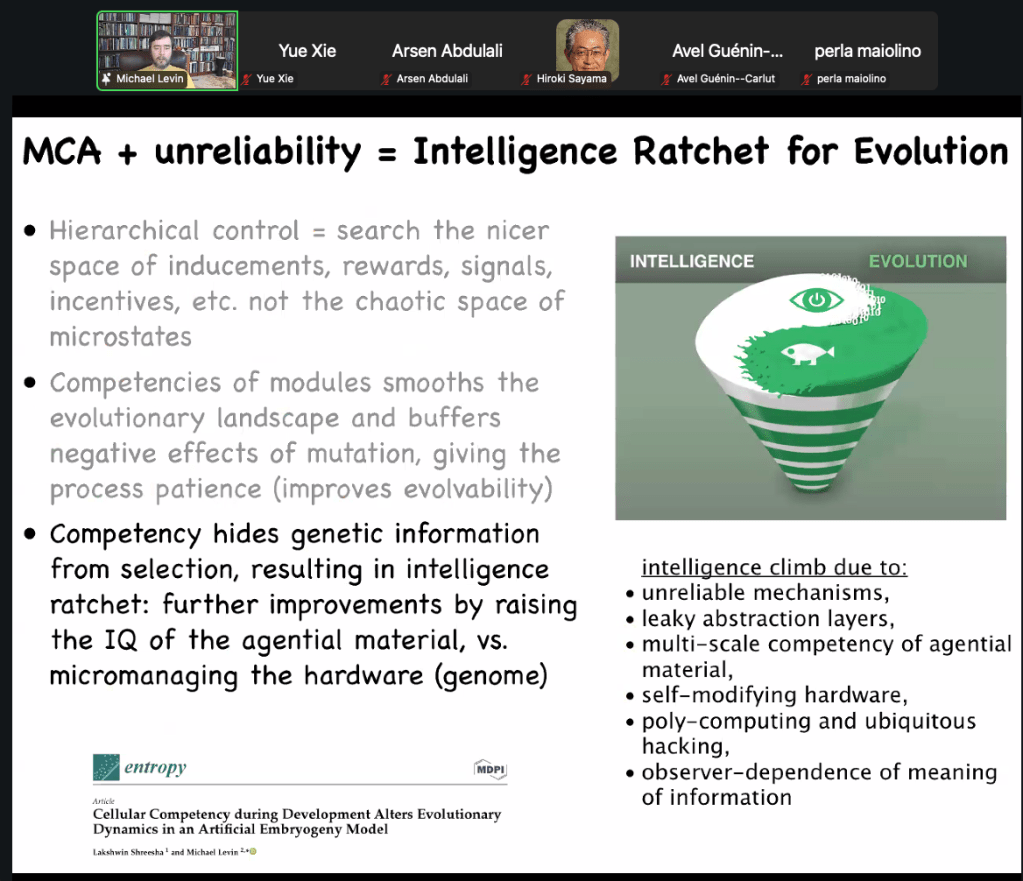

Abstract: My group uses the tools of computational and cognitive science to analyze unconventional intelligence. We study how molecular networks, cells, and tissue-level structures solve problems in physiological and anatomical problem spaces. In this talk, I will show the methods we have developed to use the bioelectric interface to communicate with the collective intelligence of cells, which gives rise to novel applications in regenerative medicine and the bioengineering of synthetic life forms. I will also describe some deep principles of the multiscale competency architecture of life, and the ways in which biological intelligence is similar to and different from the computational architectures of today’s AI. This talk will focus on the plasticity and reprogrammability of the agential material of life, and the implications for a very wide option space of embodied minds.

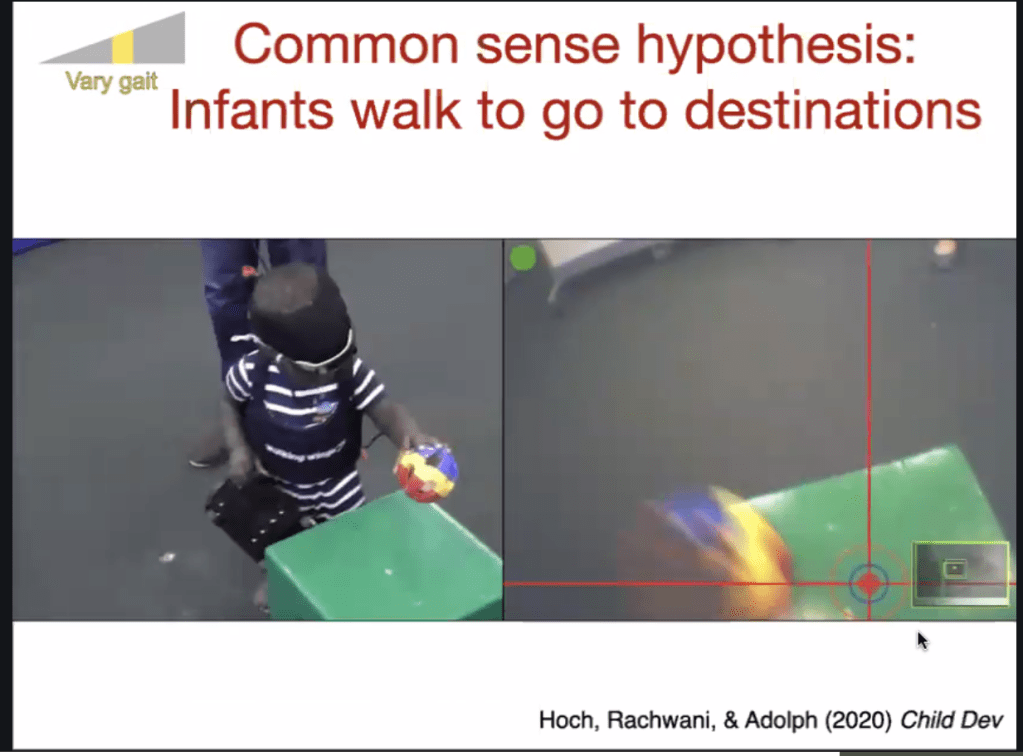

KAREN ADOLPH (NYU, USA)

VIDEO: LEARNING TO WALK: LESSONS FROM BABIES, BOTS, AND PIGLETS

Abstract: The average 18-month-old can run circles around the world’s best walking robots. Toddlers can move their legs, maintain balance, and produce varied gait patterns. But so can robots and precocial animals (e.g., piglets that walk at birth). However, toddlers also explore the terrain ahead to gather perceptual information about affordances for locomotion, so that they can prospectively plan which movements to make and how and when to make them. And when walking is impossible, infants find alternative locomotor strategies or avoid going altogether. In contrast, precocial animals cannot perceive affordances without sufficient postnatal experience, and robot locomotion is largely reactive rather than prospective. Why do babies beat bots when it comes to navigation in the real world? Babies’ prowess is not due to better “engineering” (babies’ bodies are built more for falling than for walking). Instead, infants’ functional intelligence arises from “learning algorithms” that capitalize on links between perception and action, down-weight errors, and reward effort regardless of whether movements achieve immediate goals. Moreover, developmental “training curricula” comprise immense amounts of time-distributed, variable practice, with input attuned to infants’ just-right level for learning. Indeed, simulated robot models suggest that infants’ natural practice regimen, replete with variability and errors, is optimal for building a flexible behavioral system that responds adaptively to the constraints and opportunities of an ever-changing world. Open video sharing will speed progress toward understanding behavior and its development and provide a roadmap for progress in artificial intelligence.

MORNING SHORT TALKS (VIDEOS)

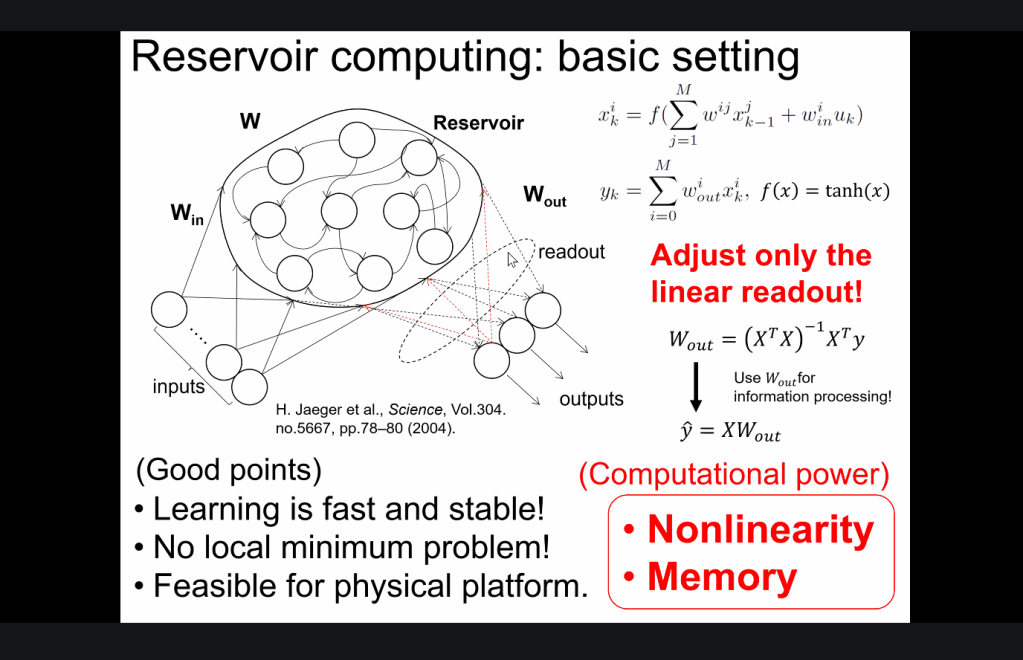

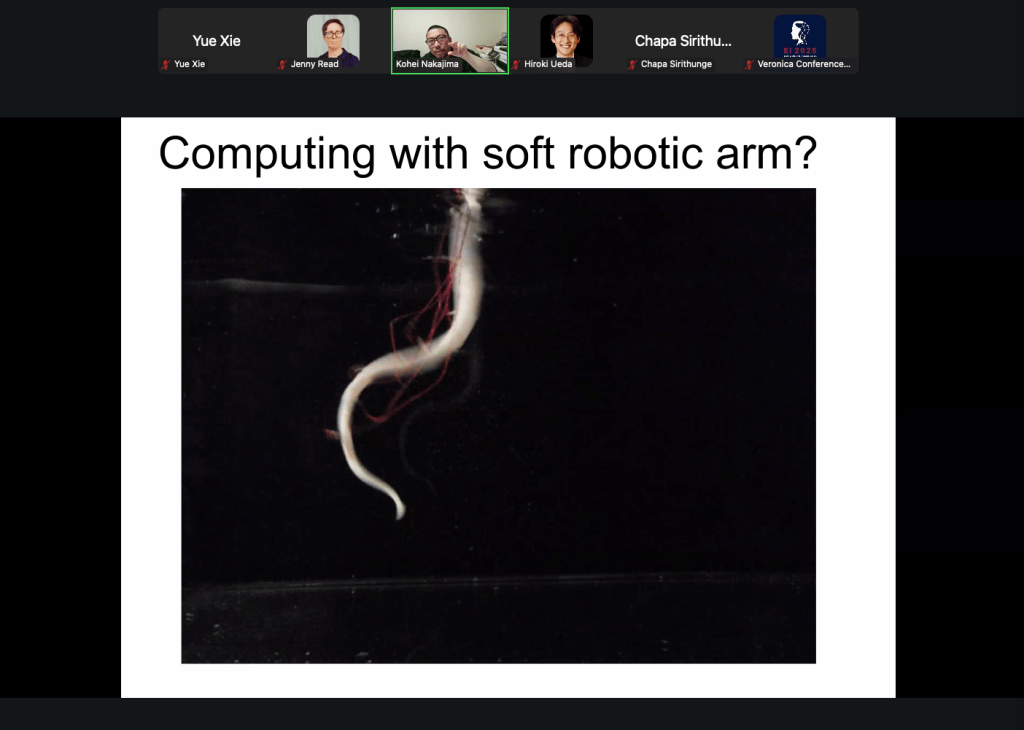

- Kohei Nakajima: DEEP LEARNING THROUGH PHYSICAL DYNAMICS

- Abstract: Embodiment has always been an important topic to consider when form meets the physical world. Physical deep learning (PDL), a framework for implementing deep learning in physical substrates, is an area where embodiment has recently taken on a critical role. In this talk, we show that PDL is fundamentally connected to the concept of physical reservoir computing and introduce a gradient-free learning scheme for PDL. Finally, we report our recent approaches to expressing learning processes through physical dynamics and discuss their impact.

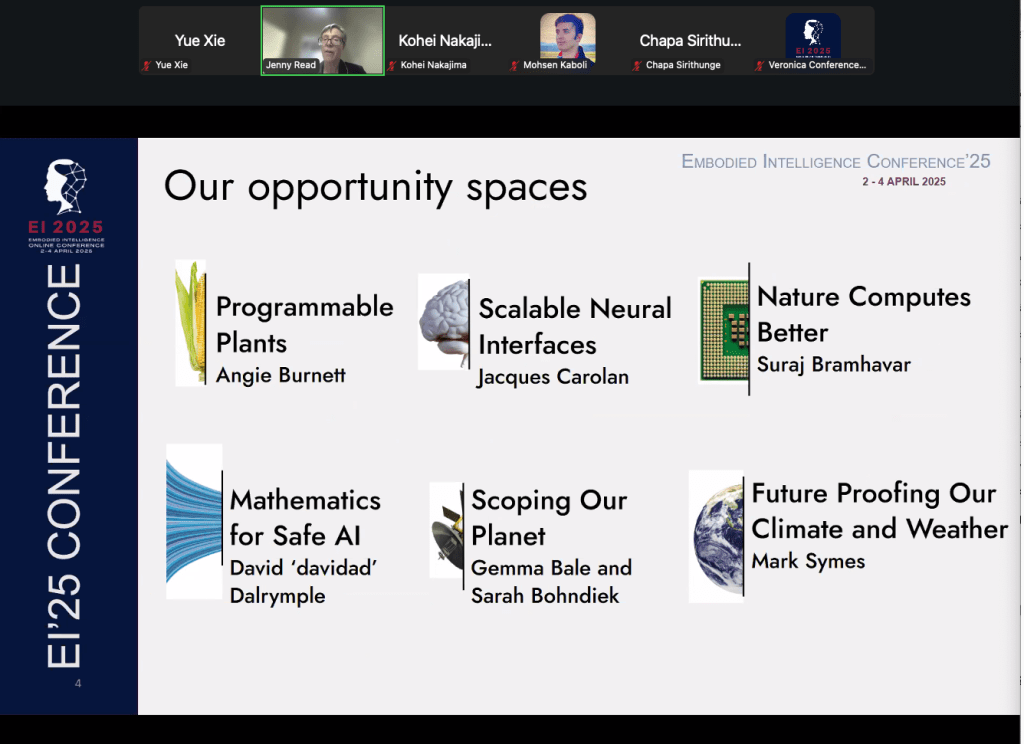

- Jenny Read: SMART MACHINES NEED SMARTER BODIES: BUILDING ARIA’S FIRST RESEARCH PROGRAMME IN ROBOTICS

- Abstract: ARIA, the Advanced Research + Invention Agency, aims to generate massive social and economic returns by empowering scientists and engineers to pursue breakthroughs at the edge of the possible. In ARIA’s model, programme directors identify opportunity spaces: areas of science or technology that are highly consequential for society; under-explored relative to their potential impact; and ripe for new talent, perspectives, or resources to change what’s possible. As an ARIA programme director, I identified robot hardware as an opportunity space. Much of the current excitement around robotics relates to advances in autonomous control following recent breakthroughs in AI. However, these advances will fairly soon bump up against the limits of what is physically possible with the available hardware. I selected robot dexterity as the target for ARIA’s first programme in this space, since dexterity is a bottleneck for so much of what we would wish robots to do for us. We are funding more than £50M of research to develop new hardware components, including tactile sensing and novel actuators. We are also funding a linked set of projects developing techniques to design robot bodies jointly with control algorithms, aiming to unlock morphological computing approaches and thus make robotics more capable and efficient. In this talk, I will present some of the projects we are funding and discuss the design of the portfolio.

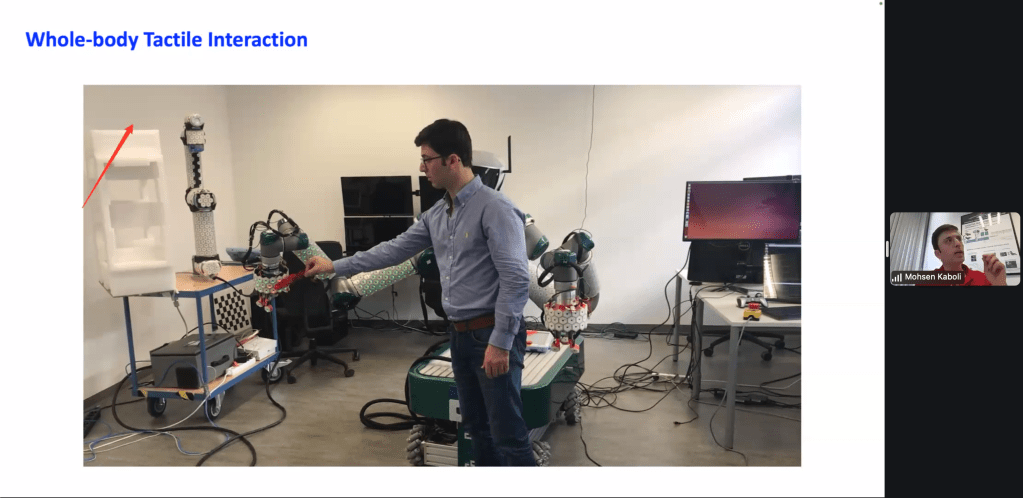

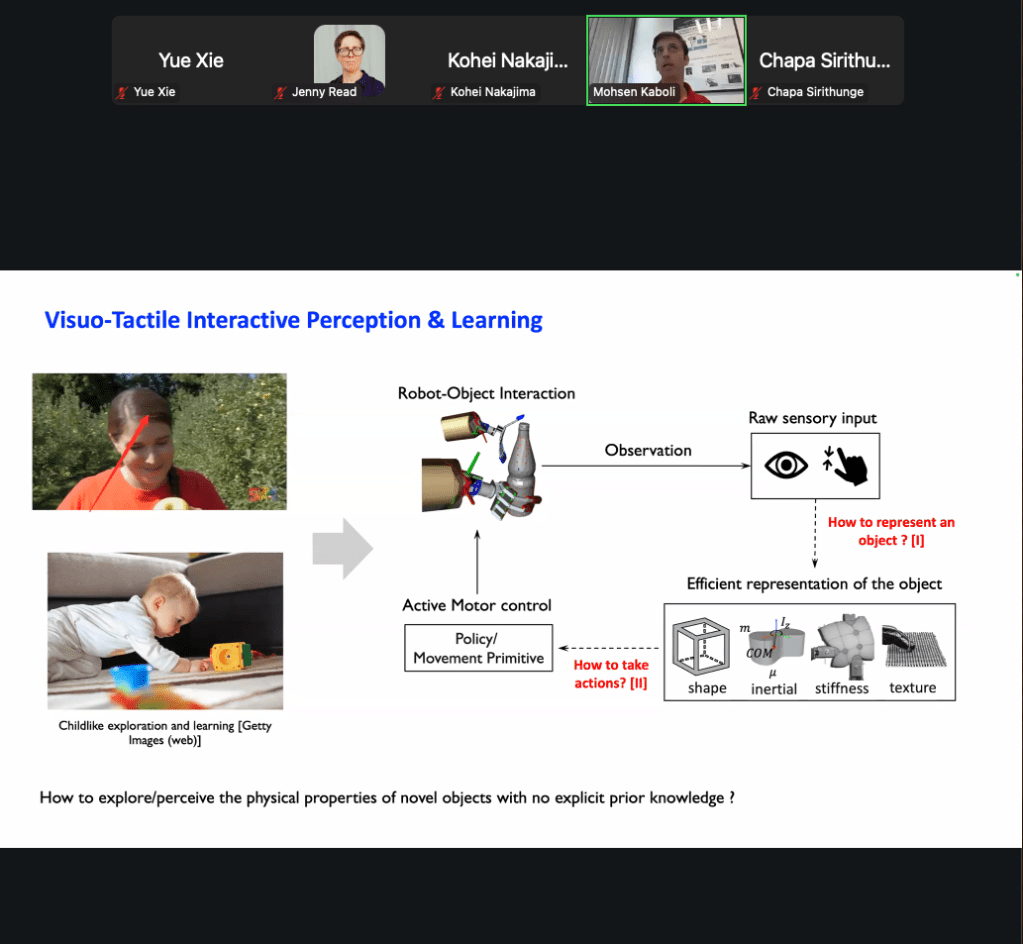

- Mohsen Kaboli: EMBODIED INTERACTIVE VISUO-TACTILE PERCEPTION AND LEARNING FOR ROBOTIC GRASP AND MANIPULATION

- Abstract: This talk explores embodied interactive visuo-tactile perception and active learning as a foundation for robotic grasping and manipulation. By integrating predictive and interactive perception, robots can actively engage with objects, refining their understanding through both visual and tactile feedback. I will discuss the role of shared visuo-tactile representations in enabling real-time adaptation, as well as cross-modal visuo-tactile perception for dexterous manipulation. The talk will highlight key challenges and future directions in developing robotic systems that can intelligently interact, actively learn, and generalize across diverse tasks and embodiments.

- PANEL DISCUSSION

AFTERNOON SHORT TALKS (VIDEOS)

- Matej Hoffmann: ROBOTBABY – UNDERSTANDING INFANT DEVELOPMENT THROUGH BABY HUMANOID ROBOTS

- Abstract: Early sensorimotor development is fundamentally embodied. We put forth the idea that in this period, the infant brain simply accumulates a patchwork of sensorimotor skills or “body know-how” rather than building explicit models of the world or the body. We use baby humanoid robots as embodied computational models to shed light on the mechanisms of the development of the “sensorimotor self”.

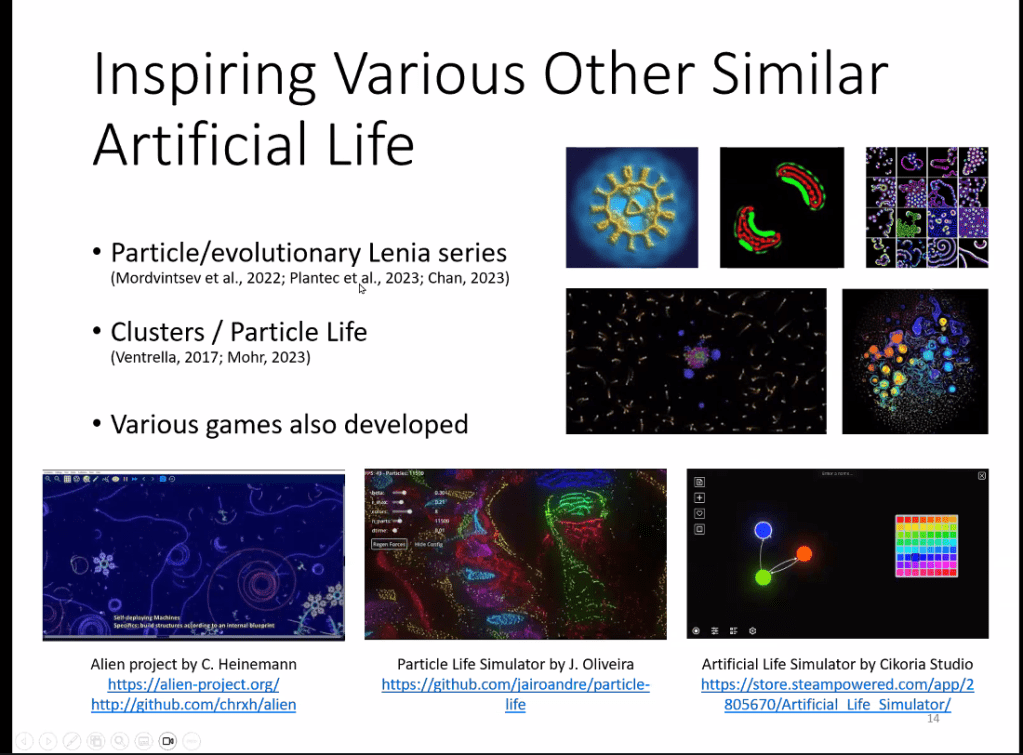

- Hiroki Sayama: DISTRIBUTED EMBODIED INTELLIGENCE IN ARTIFICIAL HETEROGENEOUS SWARMS

- Abstract: Artificial swarm systems have been studied extensively in robotics, computer science and artificial life. In particular, heterogeneous swarm systems that consist of a large number of relatively simple individual units can exhibit surprisingly rich macroscopic behaviors that often resemble sensing, cognition and ecological interaction of higher-order “organisms.” In this short talk, several cases of such emergent, distributed embodied intelligence in artificial heterogeneous swarms are introduced, using the Swarm Chemistry model and its variants as examples.

- Oliver Brock: IS EMBODIMENT THE ULTIMATE INDUCTIVE BIAS? (SPOILER: NO, IT IS MORE THAN THAT!)

- Abstract: Current machine learning wisdom would have you believe that “scale is all you need” to obtain artificial general intelligence (AGI), whatever that might mean. But from biology we know that the body encodes many important inductive biases that make learning from data possible in the first place. What might these inductive biases be? I will discuss them in the context of in-hand manipulation and long-horizon manipulation problems. I will show that if you get the embodiment right (and that includes not just the robot but also the world), the biases become so helpful that learning becomes trivial.

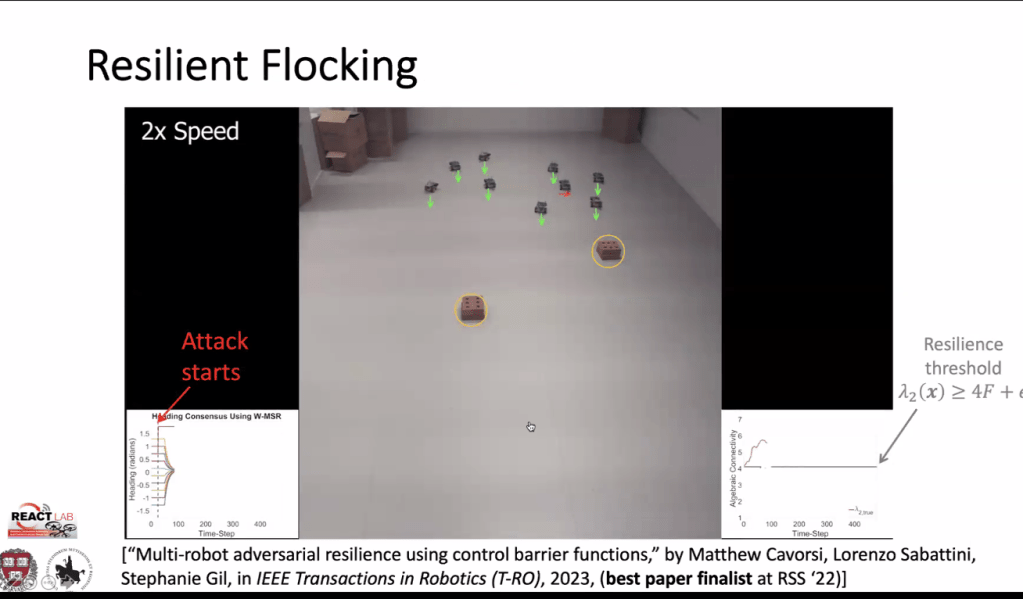

- Stephanie Gil: EXPLORING PHYSICAL EMBODIMENT FOR RESILIENCE AND SECURITY IN MULTI-ROBOT SYSTEMS

- Abstract: As multi-robot systems become increasingly common in applications such as autonomous vehicles, delivery drones, and search-and-rescue operations, ensuring the robustness of their coordination in the presence of unreliable communication and security threats is critical. This talk explores how control and information exchange can be leveraged to enhance situational awareness and resilience in these systems. Focusing on the consensus problem, we highlight limitations of classical approaches when facing a high proportion of malicious agents. We introduce a probabilistic framework based on stochastic observations of trust, where link reliability is modeled as a random variable. This approach enables strong performance guarantees, including consensus, even beyond traditional fault tolerance limits.

- PANEL DISCUSSION
